Enterprise AI, D&D with ChatGPT, & DevBurgerOps

The episode's motto: "Keep it Up."

Lots of original content this week, starting with this prediction about “enterprise AI”:

I also made these since last episode:

Another go-round at figuring out how to play D&D with ChatGPT. It went pretty well! Here’s my retro/analysis of it, and here’s the actual play session with inline commentary.

I was the guest co-host on this week’s Cloud Foundry Weekly, where we discussed the new features in Tanzu Application Service 6.0. We, of course, spent a lot of time on the generative AI beta stuff that’s included, but we also discussed other new features.

Software Defined Talk #462 - Lifting Code - This week, we discuss Matt Asay accusing OpenTofu of "lifting code" and recap the Google Next '24 announcements. Plus, we share some thoughts on camera placement and offer listeners a chance to get free coffee beans. You can also watch the unedited video.

Relative to your interests

IT departments are blowing their cloud budgets - “Nearly three in four (72%) of the IT decision-makers polled reported that their company exceeded its set cloud budget in the most recent fiscal year. Among the areas experiencing an acceleration of cloud deployments are: applications/workloads in IT operations (54%); hybrid work (50%); software development platforms and tools (45%); and digital experiences (44%).” // vendor-sponsored // has ranking of causes.

Kubernetes community capitalizes on open source and AI synergies - Amid the AI talk, 451’s Kubernetes TAM: $1.46bn in 2023, growing to $2.85bn in 2028.

Substack Is Setting Writers Up For A Twitter-Style Implosion – Home With The Armadillo - Seems weird. // “New reporting from The Wrap details how Substack’s decision to implement a new “follow” feature — part of its transition from newsletter publishing platform to social media site — has tanked subscription growth for lots of newsletter writers.”

A simple model of AI and social media - “It is better to just start by admitting that the feed is fun, and informative, for many teenagers and adults too.” // I grew up in the Satanic Panic era: clowns kidnapping kids in vans around every corner, video games scrambling kids’ heads, skulls and boobs in ice cubes, and of course fear of hip-hop. People actually thought that, across the country, there were Satanic cults trying to convert your children to worshiping the devil, or worse. It was in the news. A lot. I mean, you know [shrugs]: the 80s. So when I see people worrying about popular culture ruining The Kids, I’m immediately suspicious. // Also, I am part of the first generation of heavy Internet users (Gen-X), so I know what the analog world was like: from nothing, to beepers and pay phones, to alphanumeric pagers, the WWW, flip phones, laptops replacing desktops as the norm, WiFi, and then finally smartphones. The analog world sucked in comparison. iPhone 3G forever!

AI Corner

Next ’24 - Google Cloud CEO on why an open AI platform with choice, one which allows you to differentiate, is essential - Enterprise AI as a feature.

‘This shit’s so expensive’: a note on generative models and software margins - “The fundamental problem with generative models is that they are 10x too expensive to work with the industry’s default business models and structure. Either these companies who are going all-in on ‘AI’ need to fundamentally change everything about how they work – laying off a bunch of people won’t make ML compute 10x cheaper so they’d need to change the org to survive on razor-thin margins – or they need to discover some undefined magical way of lowering compute costs 10x. So far they’re opting for magic.”

Counterpoint: Notes on how to use LLMs in your product. - “Even the most expensive LLMs are not that expensive for B2B usage. Even the cheapest LLM is not that cheap for Consumer usage – because pricing is driven by usage volume, this is a technology that’s very easy to justify for B2B businesses with smaller, paying usage. Conversely, it’s very challenging to figure out how you’re going to pay for significant LLM usage in a Consumer business without the risk of significantly shrinking your margin.”

The Art of Product Management in the Fog of AI - “before products launch, it’s critical to run the machine learning systems through a battery of tests to understand in the most likely use cases, how the LLM will respond.”

Amazon Isn’t Killing Just Walk Out But Rather “Pushing Hard” On It - Hmm, hopefully we’ll see some nuanced corrections in all them news outlets…

From the VP of Cable’s Mood Board, then/now, 2005/2024

Wastebook

“You can never have too many chickens.” This guy is amazing. Internet art at its finest.

“Meanwhile, I’m headed to Milwaukee this weekend to play pinball and get drunk. Perhaps I should rethink my life choices.”

“Keep it up.”

Word watch, “pink slime”: “Pink slime sites mimic local news providers but are highly partisan and tend to bury their deep ties to dark money, lobbying groups, and special interests.”

They’re like the Karens of Europe.

Mutually assured mansplaining.

“Nexit.” NL Politics.

News from the AI v. Human front: “You spend a week writing the 70 page doc only [to] have it sent back to you in 10 minutes. God help us.”

“Everyone did the best job they could, given what they knew at the time, their skills and abilities, the resources available, and the situation at hand.” Here.

Logoff

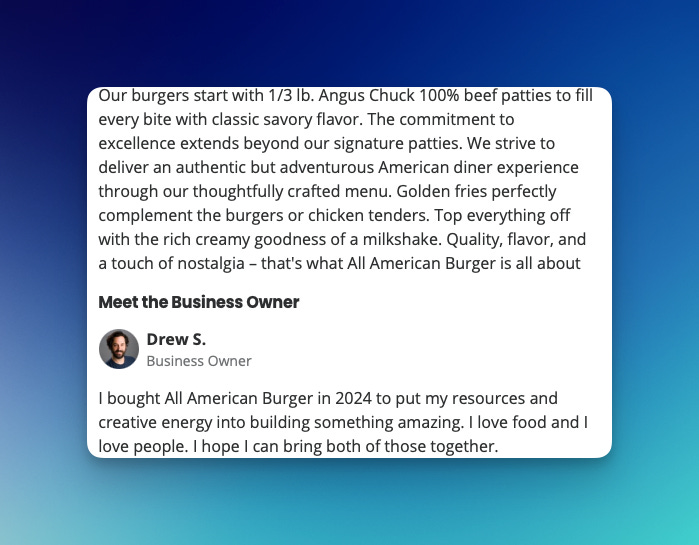

My old pal Andrew Shafer bought a burger place in Tucson:

I know! I bet they’re good burgers; too bad I don’t get to Tucson much.